Thinking About Agents

Agents

Sometimes they're good. Sometimes they're bad.

Some good properties of agents are:

- They live in your environment. You don't like it? Turn it off.

- They are generally simpler pieces of software. Most servers dwarf agents for complexity.

- They're generally less computationally intensive, particularly if you break problems into smaller chunks and swarm agents over a task.

Some bad things about agents:

- They're often someone else's software running in your environment. We do that a lot more than we used to, but we're still working out the details on trusting software we get from strangers.

- They often run with elevated privileges, so while your overall system might not have security implications, your agents probably do.

- Because agents are one step removed from everything else in your system, they have to be very self-sufficient. For example, they can't just consume all the memory on a system.

What that means is that agent design is an art form, even more than most things in tech already are. So writing an agent requires some decisions that are worth writing down.

Deployment

The first agent decision is deployment. How to get the agent to the right place. In Privay's case there are a few different places that the agent can go. This is mostly based on the things that Privay needs to look at. Right now I can think of a few places Privay needs to look at:

- Databases

- Slack

- Email

- S3 Buckets

Each of those has a slightly different deployment profile. Slack is on Slack's servers. Email is probably in the cloud via Microsoft or Google but could be on-premises or sometimes in third-party stores like Postmark. S3 and most (not all) databases are in cloud environments.

Given that, you're looking for something flexible. Shipping a binary is out because Slack and email services are most likely not going to allow random binaries to be executed on their platforms. That also frees me from making decisions about OSes, such as whether I want to build an OS X executable. So from there you have a few options. I went with Docker containers. The main reason is just how straightforward they are to deploy in cloud environments, and for on-prem environments it's plausible to deploy Docker via things like VMware Photon. In later iterations the ability to make a container that's suitable for Kubernetes is also there, although that requires some thought: my experience of Kubernetes is that it's easy to make a crappy container inside Kubernetes and much harder to make a good one.

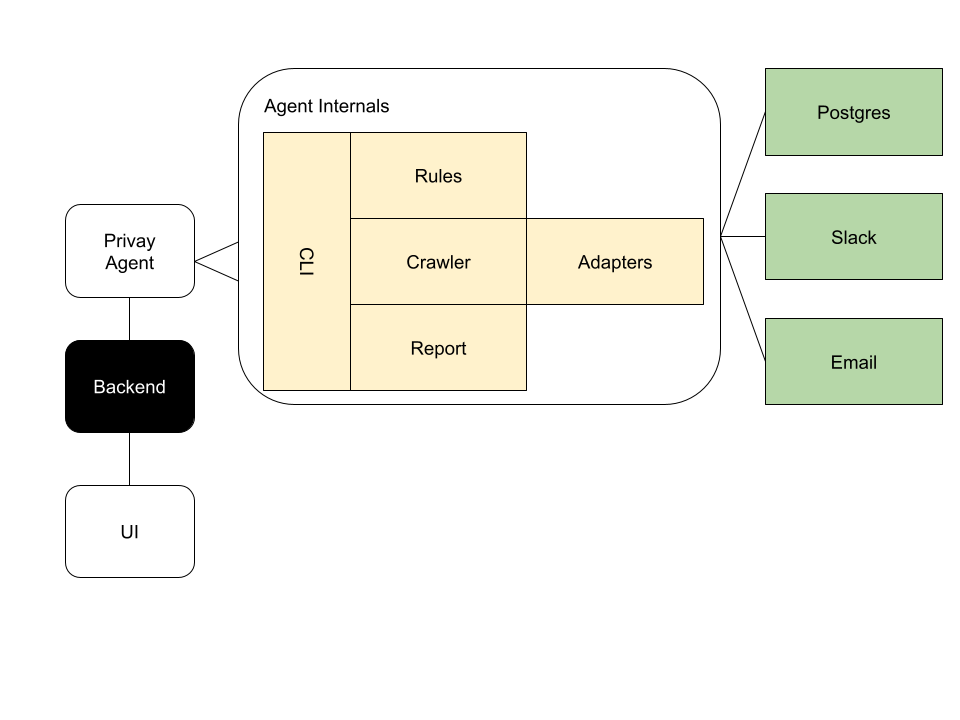

That leads to a logical architecture that looks something like this:

Threat Modelling

Once you have an architecture diagram like the one above, you can move on to some threat modelling. Threat modelling is great: it allows teams and individuals to go through lists of possible issues and decide if they apply to them. I'm using the STRIDE model through a card game called Elevation of Privilege.

It is definitely more fun doing this in a group but right now I want to make sure that Privay's architecture has good security built in from the start. So that means doing the threat modelling on my own.

First Identified Threat

Almost immediately I get:

Well that's pretty much straight in the wheelhouse of agents. You have an agent, it needs to know:

- If it gets a request (particularly to perform some action) that the request comes from Privay's back-end.

- That request is tied to a user that Privay knows about.

I'm going to go around this a few times, because it's a critical part of agent security, but there's a first step to the first issue, and that is to authenticate between machines. So how do I make sure that a request is coming from Privay? There are a few approaches, some more popular than others.

- Bearer Tokens

- mTLS

- Encrypted message exchange

Privay's back-end must communicate with Privay's agents (given the number of times I'm going to end up talking about the Privay back-end and agents, I'm just going to use back-end and agent from here). It's important to note the direction of that: the agent gets communications from the back-end. In a web application it's normally the other way around, and the back-end must check that the incoming request is authenticated. But here, to supply configuration and actions, the back-end must talk to the agent and convince the agent it is entitled to do that.

I can see value in all of the approaches, though each has its own issues. But I'm going to start with bearer tokens. Bearer tokens are relatively easy to do because they piggyback off requests. Got an incoming request? Put the bearer token on the request and check it on the way through.

Now remember, there are problems with bearer tokens. They are point in time, so a bearer token can stop being valid and you have no way of knowing unless you check again. This can make an agent quite chatty, in that the regular checks require regular comms out from the agent. So you can't rely on bearer tokens alone. Also, if the bearer token is not refreshed frequently, you're in danger of having the token stolen and used by an adversary.
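To make the "check again" trade-off concrete, here's a minimal Python sketch. It is not Privay's actual code: `BearerTokenChecker`, `extract_bearer`, the `introspect` callback (standing in for whatever call out to the back-end confirms a token is still valid) and the 60 second re-check window are all assumptions for illustration.

```python
import time
from typing import Callable, Dict, Optional


def extract_bearer(authorization_header: Optional[str]) -> Optional[str]:
    """Pull the token out of an 'Authorization: Bearer <token>' header value."""
    if not authorization_header:
        return None
    scheme, _, token = authorization_header.partition(" ")
    token = token.strip()
    if scheme.lower() != "bearer" or not token:
        return None
    return token


class BearerTokenChecker:
    """Trusts a token only for a short window after the last successful check."""

    def __init__(
        self,
        introspect: Callable[[str], bool],  # hypothetical call out to the back-end
        recheck_after_s: float = 60.0,      # assumed window, tune to taste
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._introspect = introspect
        self._recheck_after_s = recheck_after_s
        self._clock = clock
        self._last_ok: Dict[str, float] = {}  # token -> time of last good check

    def is_authorized(self, authorization_header: Optional[str]) -> bool:
        token = extract_bearer(authorization_header)
        if token is None:
            return False
        now = self._clock()
        checked_at = self._last_ok.get(token)
        if checked_at is not None and now - checked_at < self._recheck_after_s:
            return True  # recently confirmed, no comms out needed
        if self._introspect(token):  # this is the "chatty" part
            self._last_ok[token] = now
            return True
        self._last_ok.pop(token, None)  # token no longer valid, forget it
        return False
```

A shorter window means more comms out from the agent. A longer one means a revoked or stolen token keeps working for longer.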

As it happens, Auth0 has this covered using JWTs for what they call Machine to Machine (M2M) authorization. So I'm using that. I'll let you know how I go :smile:
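For a feel of what checking a JWT involves on the agent side, here's a rough standard-library Python sketch. It assumes an HS256 (shared secret) token purely to stay dependency-free; tokens signed with an asymmetric algorithm such as RS256 would need a crypto library and the issuer's public key instead. The function names, the `privay-agent` audience and the example issuer URL are all made up for illustration, and `sign_hs256` exists only so the sketch can be exercised.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional


def _b64url_encode(raw: bytes) -> str:
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def _b64url_decode(part: str) -> bytes:
    # JWTs strip base64 padding, so put it back before decoding.
    return base64.urlsafe_b64decode(part + "=" * (-len(part) % 4))


def sign_hs256(claims: dict, secret: bytes) -> str:
    """Demo-only helper: build an HS256 JWT so the verifier can be tried out."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    sig = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + _b64url_encode(sig)


def verify_hs256(token: str, secret: bytes, audience: str, issuer: str,
                 now: Optional[float] = None) -> dict:
    """Return the claims if the token checks out, otherwise raise ValueError."""
    try:
        header_b64, payload_b64, sig_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(sig_b64)
    except ValueError as exc:  # covers bad base64, bad JSON, wrong part count
        raise ValueError("malformed token") from exc
    if not isinstance(header, dict) or not isinstance(claims, dict):
        raise ValueError("malformed token")
    # Pin the algorithm rather than trusting whatever the token asks for.
    if header.get("alg") != "HS256":
        raise ValueError("unexpected algorithm")
    expected = hmac.new(
        secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256
    ).digest()
    if not hmac.compare_digest(signature, expected):
        raise ValueError("bad signature")
    current = time.time() if now is None else now
    exp = claims.get("exp")
    if not isinstance(exp, (int, float)) or exp <= current:
        raise ValueError("token expired")
    if claims.get("iss") != issuer:
        raise ValueError("wrong issuer")
    aud = claims.get("aud")
    if audience not in (aud if isinstance(aud, list) else [aud]):
        raise ValueError("wrong audience")
    return claims
```

The signature check covers "did this come from the back-end", and the returned claims (for example `sub`) give the agent something to tie the request to an identity the back-end knows about.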